Artificial Intelligence based chip design: Chip Design

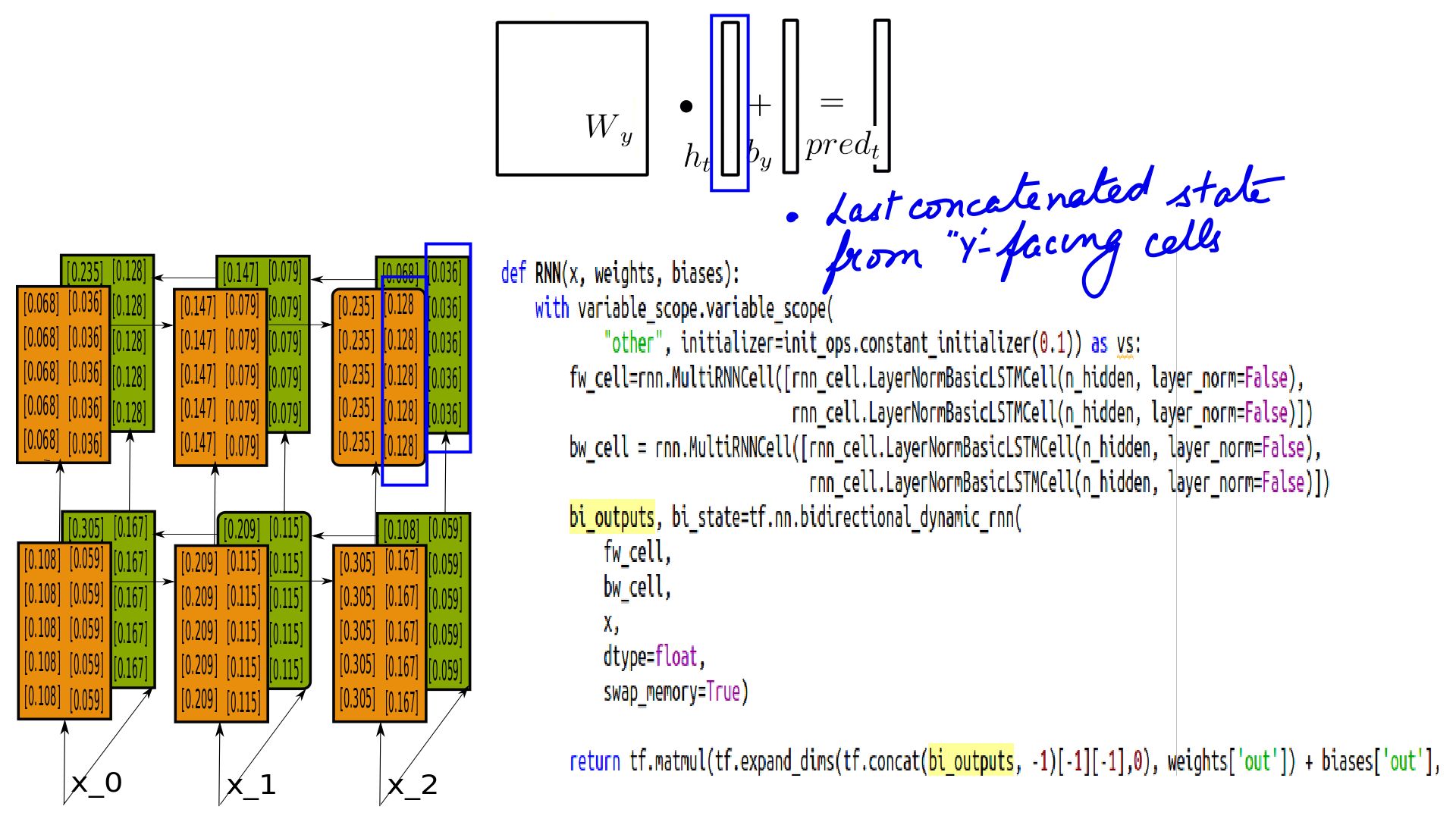

This is a departure from my usual tech blog. In the current series, titled Artificial-Intelligence-based-chip-design, we present the use of Artificial Intelligence to design FPGA chips for ultra-low-latency speech transformers. The series showcases our products as well as our skills in the respective domains.

It started as an experiment to lower the latency of a seq-to-seq, LSTM-based speech synthesis system. After a thorough analysis, we broke the problem down into two parts: (1) mapping the matrix multiplications of deep learning transformers onto an FPGA chip (Transformers On Chip, part 1) and (2) co-designing the FPGA chip for the appropriate workload (Chip Design, part 2).

In part 2 we treat the resources on the FPGA chip, the workload, and the other design parameters as design variables, and we feed those variables to a Deep Reinforcement Learning agent. Once trained, the agent is expected to produce a placement of blocks that optimizes objectives such as latency. The Deep Reinforcement Learning algorithm is supposed to find a balance that speeds up computation for tolerable accuracy losses (if any at all). To use a boxing term, the result should be stronger pound for pound: the same hardware, micro-designed by a Deep Reinforcement Learning agent to carry a specific load.
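To make the idea of "design variables plus an objective" concrete, here is a minimal toy sketch. Everything in it is an assumption made for illustration: the two design variables (`parallel_multipliers`, `bit_width`), the resource budget, and the latency and accuracy models are hypothetical, not measurements from our FPGA. An exhaustive grid search also stands in for the Deep Reinforcement Learning agent, simply to show the kind of reward such an agent would be trained against.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class DesignPoint:
    """One candidate design (hypothetical variables, for illustration only)."""
    parallel_multipliers: int  # multipliers working side by side
    bit_width: int             # numeric precision of each multiplier


RESOURCE_BUDGET = 4096  # assumed budget in abstract "multiplier x bit" units
MAX_ACCURACY_LOSS = 0.03  # assumed tolerable accuracy loss


def estimate_latency(p: DesignPoint, macs: int = 1_000_000) -> float:
    # Toy model: more multipliers and narrower words both reduce latency.
    return macs * p.bit_width / (16 * p.parallel_multipliers)


def estimate_accuracy_loss(p: DesignPoint) -> float:
    # Toy model: every bit dropped below 16 costs a little accuracy.
    return max(0.0, (16 - p.bit_width) * 0.005)


def reward(p: DesignPoint) -> float:
    """Negative latency, or -inf when the design breaks a constraint."""
    over_budget = p.parallel_multipliers * p.bit_width > RESOURCE_BUDGET
    too_lossy = estimate_accuracy_loss(p) > MAX_ACCURACY_LOSS
    if over_budget or too_lossy:
        return float("-inf")
    return -estimate_latency(p)


def search() -> DesignPoint:
    # Exhaustive search over a tiny grid; a stand-in for the learning agent.
    grid = product([64, 128, 256, 512], [4, 8, 12, 16])
    return max((DesignPoint(m, b) for m, b in grid), key=reward)


if __name__ == "__main__":
    best = search()
    print(best, reward(best))
```

In this toy setting the widest design is ruled out by the resource budget and the narrowest ones by the accuracy limit, so the search settles on a middle point. A real design space is far too large to enumerate, which is where a learning agent becomes useful.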